How Wells Fargo builds responsible artificial intelligence

AI should enhance how you bank, not complicate it. Take a look at how Wells Fargo engineers are building the next AI tools around your needs.

Editor’s note: This is the second part of a Wells Fargo Stories series focused on artificial intelligence. Read part 1: “Wells Fargo, artificial intelligence, and you.” Read part 3: “How AI can shape the future of banking.”

Key takeaways:

- Building responsible artificial intelligence requires using tested and validated data to limit bias.

- Wells Fargo’s AI tools have built-in transparency to make their decisions explainable and trackable.

- Wells Fargo is a founding member of Stanford University’s Institute for Human-Centered AI (HAI) corporate affiliate program, which has helped thousands of employees learn about AI ethics.

“With great power, there must also come great responsibility” isn’t just a Spider-Man catchphrase. It’s how companies like Wells Fargo are approaching emerging artificial intelligence technology. The rise of AI is making daily tasks like banking easier, but when AI isn’t used responsibly, it rightfully creates suspicion. Responsible AI can change that.

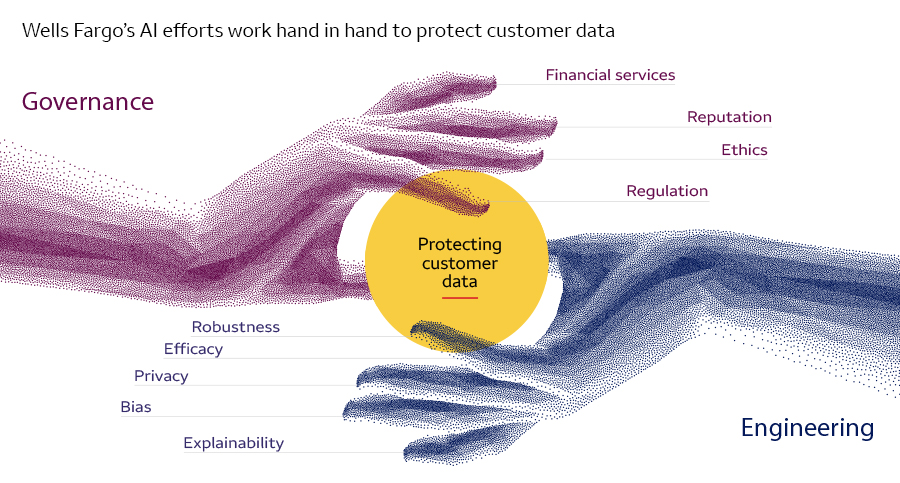

“Our responsibility to our customers is that we are fully transparent, we protect their data and their money, we give the best advice based on what we know, and we don’t cause harm,” said Chintan Mehta, Wells Fargo’s chief information officer and head of Digital Technology & Innovation. “For us, responsible AI means AI that satisfies these tenets.”

Wells Fargo’s tech experts are building trust and responsibility into AI in four ways: eliminating bias, providing transparency, offering alternatives to customers, and building partnerships designed to research and promote ethical use of AI.

1. Beating bias in AI

AI is only as good as the data it’s trained on. With limited or untested data, algorithms can learn false assumptions about various demographics, how people speak, or even where they’re located.

That’s why Wells Fargo’s technology teams have several methods for validating the data and AI algorithms they use. For example, adverse effect checks compare AI models during testing to tease out potential biases, and developers then go back to check and correct the algorithms.

Beyond that, independent groups review AI-based products and experiences at multiple development points to make sure they’re ready for customers.

“Even though it’s a heavy lift, this process of testing and validating helps us keep up as new models and new techniques come along,” Mehta said.
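The article does not say how Wells Fargo’s adverse effect checks are implemented. As a purely illustrative sketch, one common way to screen model outputs for this kind of bias is to compare the rate of favorable outcomes across groups and flag any group whose rate falls well below the highest one. The function names, the group labels, the sample data, and the 0.8 threshold (the “four-fifths” rule of thumb) are all assumptions made for this example, not details from Wells Fargo.

```python
from collections import defaultdict

# Assumed threshold: the "four-fifths" rule of thumb, not taken from the article.
FOUR_FIFTHS = 0.8


def selection_rates(decisions):
    """Return the share of favorable outcomes for each group.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def adverse_impact_ratios(decisions):
    """Divide each group's favorable rate by the highest group rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        # No group received a favorable outcome, so there is nothing to compare.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flagged_groups(decisions, threshold=FOUR_FIFTHS):
    """List the groups whose ratio falls below the threshold."""
    ratios = adverse_impact_ratios(decisions)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical model outputs: group "A" is approved 8 of 10 times, group "B" 5 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

print(flagged_groups(sample))  # ['B']
```

In a sketch like this, a flagged group is only a prompt for the kind of follow-up review described above, not proof that a model is biased.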

2. No rogue AI

Transparency is built into each stage of AI development. For example, it should always be clear how and why an AI model makes a decision. Wells Fargo developers use open-source platforms with built-in decision-making transparency and data mapping. Because of that, Wells Fargo AI models can’t “hallucinate,” a phenomenon typical of large chatbot language models in which AI creates inaccurate, contradictory, or nonsensical information for seemingly no reason.

“You never let an AI neural network reach conclusions without knowing why,” Mehta said. “The data we use is extremely explainable and has a lineage that we can track.”

3. You can use alternative options

While AI is designed to make banking easier, it’s not how every customer wants to bank. For those customers, Wells Fargo always offers an alternative to its AI-based products and services, including Fargo, the virtual assistant built into the Wells Fargo Mobile app.

“Wells Fargo offers options for customers to engage using tools and services that are the right fit for them personally,” Mehta said.

4. Partnership and research for ethical AI

To help the company build better AI, Wells Fargo recently became a founding member of the corporate affiliate program at Stanford University’s Institute for Human-Centered AI, or HAI. So far, more than 4,000 Wells Fargo employees have taken part in the program, which brings companies together to share expertise, learn about AI ethics, and fund research.

“The program helps us by connecting with companies that are actually experiencing the problems and potentials of AI in their own businesses,” said James Landay, vice director of HAI and a professor in Stanford’s engineering and computer science departments.

This collaboration is more important than ever. Reports of AI misuse have risen every year for the past decade, likely because more people are learning about AI, including how to use it unethically, according to HAI’s annual Artificial Intelligence Index Report.

What irresponsible AI looks like can be confusing. Often, people associate AI with the futuristic machines in science fiction TV and movies, Landay said. To combat misuse, more consumer education, regulation, and examples of ethical AI use are needed.

“The real dangers of AI are not that AI takes over the world. The real dangers of AI are the dangers that are already here,” he said. “What we mean by human-centered AI … is how do we make our AI serve humanity in a positive way without the negative outcomes?”

The aim of Wells Fargo’s transparency and safety infrastructure is to ensure that AI helps customers bank more quickly and easily, and that it earns their trust.

“With AI, customers are getting faster, more intuitive experiences than they would have otherwise had,” Mehta said. “We’re doing all we can to be thoughtful about how we’re building AI.”
